If this is your first time on this question, I suggest reading the pre-update part below first, then this part. Here's a synthesis of the problem, though:

Basically, I have a collision detection and resolution engine with a grid spatial partitioning system where order-of-collision and collision groups matter. One body at a time must move, then detect collision, then resolve collisions. If I move all the bodies at once, then generate possible collision pairs, it is obviously faster, but resolution breaks because order-of-collision isn't respected. If I move one body a time, I'm forced to get bodies to check collisions, and it becomes a n^2 problem. Put groups in the mix, and you can imagine why it gets very slow very fast with a lot of bodies.

Update: I've worked really hard on this, but couldn't manage to optimize anything.

I successfully implemented the "painting" described by Will and changed groups into bitsets, but it is a very very minor speedup.

I also discovered a big issue: my engine is order-of-collision dependent.

I tried an implementation of unique collision pair generation, which definitely speed up everything by a lot, but broke the order-of-collision.

Let me explain:

in my original design (not generating pairs), this happens:

- a single body moves

- after it has moved, it refreshes its cells and gets the bodies it collides against

- if it overlaps a body it needs to resolve against, resolve the collision

this means that if a body moves, and hits a wall (or any other body), only the body that has moved will resolve its collision and the other body will be unaffected.

This is the behavior I desire.

I understand it's not common for physics engines, but it has a lot of advantages for retro-style games.

in the usual grid design (generating unique pairs), this happens:

- all bodies move

- after all bodies have moved, refresh all cells

- generate unique collision pairs

- for each pair, handle collision detection and resolution

in this case a simultaneous move could have resulted in two bodies overlapping, and they will resolve at the same time - this effectively makes the bodies "push one another around", and breaks collision stability with multiple bodies

This behavior is common for physics engines, but it is not acceptable in my case.

I also found another issue, which is major (even if it's not likely to happen in a real-world situation):

- consider bodies of group A, B and W

- A collides and resolves against W and A

- B collides and resolves against W and B

- A does nothing against B

- B does nothing against A

there can be a situation where a lot of A bodies and B bodies occupy the same cell - in that case, there is a lot of unnecessary iteration between bodies that mustn't react to one another (or only detect collision but not resolve them).

For 100 bodies occupying the same cell, it's 100^100 iterations! This happens because unique pairs aren't being generated - but I can't generate unique pairs, otherwise I would get a behavior I do not desire.

Is there a way to optimize this kind of collision engine?

These are the guidelines that must be respected:

Pre-update:

Foreword: I'm aware that optimizing this bottleneck is not a necessity - the engine is already very fast. I, however, for fun and educational purposes, would love to find a way to make the engine even faster.

I'm creating a general-purpose C++ 2D collision detection/response engine, with an emphasis on flexibility and speed.

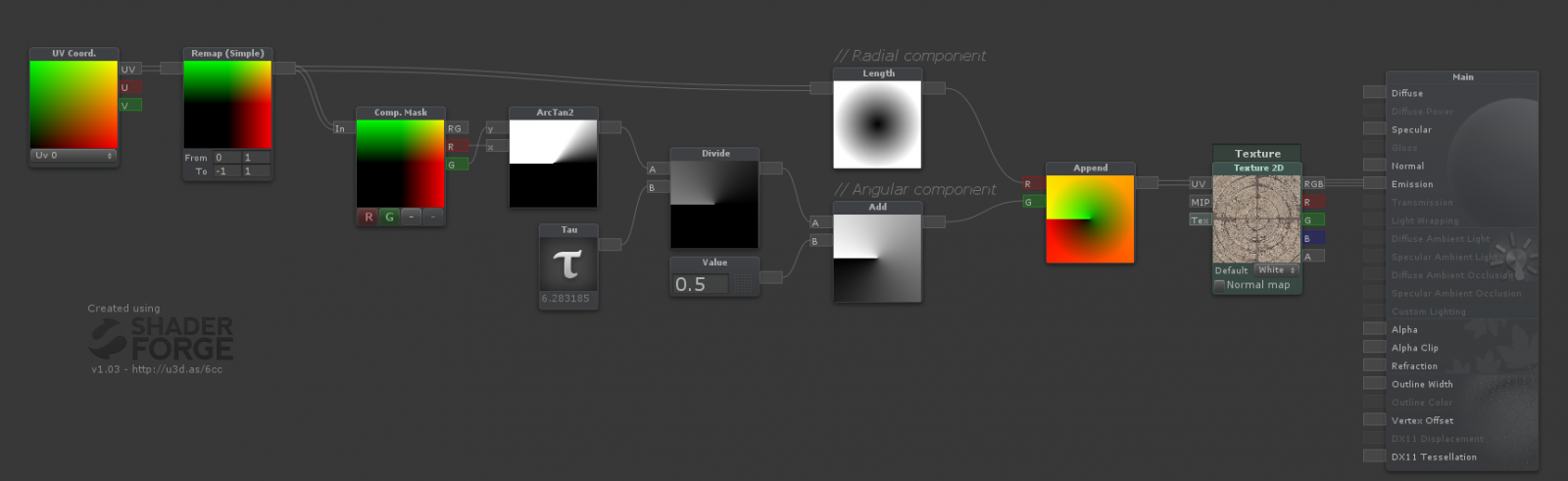

Here's a very basic diagram of its architecture:

Basically, the main class is World, which owns (manages memory) of a ResolverBase*, a SpatialBase* and a vector.

SpatialBase is a pure virtual class which deals with broad-phase collision detection.

ResolverBase is a pure virtual class which deals with collision resolution.

The bodies communicate to the World::SpatialBase* with SpatialInfo objects, owned by the bodies themselves.

There currenly is one spatial class: Grid : SpatialBase, which is a basic fixed 2D grid. It has it's own info class, GridInfo : SpatialInfo.

Here's how its architecture looks:

The Grid class owns a 2D array of Cell*. The Cell class contains a collection of (not owned) Body*: a vector which contains all the bodies that are in the cell.

GridInfo objects also contain non-owning pointers to the cells the body is in.

As I previously said, the engine is based on groups.

Body::getGroups() returns a std::bitset of all the groups the body is part of.

Body::getGroupsToCheck() returns a std::bitset of all the groups the body has to check collision against.

Bodies can occupy more than a single cell. GridInfo always stores non-owning pointers to the occupied cells.

After a single body moves, collision detection happens. I assume that all bodies are axis-aligned bounding boxes.

How broad-phase collision detection works:

Part 1: spatial info update

For each Body body:

- Top-leftmost occupied cell and bottom-rightmost occupied cells are calculated.

- If they differ from the previous cells,

body.gridInfo.cells is cleared, and filled with all the cells the body occupies (2D for loop from the top-leftmost cell to the bottom-rightmost cell).

body is now guaranteed to know what cells it occupies.

Part 2: actual collision checks

For each Body body:

body.gridInfo.handleCollisions is called:

void GridInfo::handleCollisions(float mFrameTime)

{

static int paint{-1};

++paint;

for(const auto& c : cells)

for(const auto& b : c->getBodies())

{

if(b->paint == paint) continue;

base.handleCollision(mFrameTime, b);

b->paint = paint;

}

}

void Body::handleCollision(float mFrameTime, Body* mBody)

{

if(mBody == this || !mustCheck(*mBody) || !shape.isOverlapping(mBody->getShape())) return;

auto intersection(getMinIntersection(shape, mBody->getShape()));

onDetection({*mBody, mFrameTime, mBody->getUserData(), intersection});

mBody->onDetection({*this, mFrameTime, userData, -intersection});

if(!resolve || mustIgnoreResolution(*mBody)) return;

bodiesToResolve.push_back(mBody);

}

So, I've been trying to optimize this broad-phase collision detection for quite a while now. Every time I try something else than the current architecture/setup, something doesn't go as planned or I make assumption about the simulation that later are proven to be false.

My question is: how can I optimize the broad-phase of my collision engine?

Is there some kind of magic C++ optimization that can be applied here?

Can the architecture be redesigned in order to allow for more performance?

Callgrind output for latest version: http://txtup.co/rLJgz

getBodiesToCheck()

There could be two problems with the getBodiesToCheck() function; first:

if(!contains(bodiesToCheck, b)) bodiesToCheck.push_back(b);

This part is O(n2) isn't it?

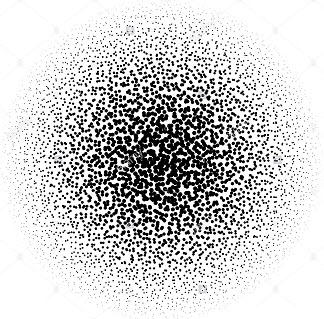

Rather than checking to see if the body is already in the list, use painting instead.

loop_count++;

if(!loop_count) { // if loop_count can wrap,

// you just need to iterate all bodies to reset it here

}

bodiesToCheck.clear();

for(const auto& q : queries)

for(const auto& b : *q)

if(b->paint != loop_count) {

bodiesToCheck.push_back(b);

b->paint = loop_count;

}

return bodiesToCheck;

You are dereferencing the pointer in the gather phase, but you'd be dereferencing it in the test phase anyway so if you have enough L1 its no big deal. You can improve performance by adding pre-fetch hints to the compiler too e.g. __builtin_prefetch, although that is easier with classic for(int i=q->length; i-->0; ) loops and such.

That's a simple tweak, but my second thought is that there could be a faster way to organise this:

You can move to using bitmaps instead, though, and avoiding the whole bodiesToCheck vector. Here's an approach:

You are using integer keys for bodies already, but then looking them up in maps and things and keeping around lists of them. You can move to a slot allocator, which is basically just an array or vector. E.g.:

class TBodyImpl {

public:

virtual ~TBodyImpl() {}

virtual void onHit(int other) {}

virtual ....

const int slot;

protected:

TBodyImpl(int slot): slot(slot_) {}

};

struct TBodyBase {

enum ... type;

...

rect_t rect;

TQuadTreeNode *quadTreeNode; // see below

TBodyImpl* imp; // often null

};

std::vector bodies; // not pointers to them

What this means is that all the stuff needed to do the actual collisions is in linear cache-friendly memory, and you only go out to the implementation-specific bit and attach it to one of these slots if there's some need to.

To track the allocations in this vector of bodies you can use an array of integers as a bitmap and use bit twiddling or __builtin_ffs etc. This is super efficient to move to slots that are currently occupied, or find an unoccupied slot in the array. You can even compact the array sometimes if it grows unreasonably large and then lots are marked deleted, by moving those on the end to fill in the gaps.

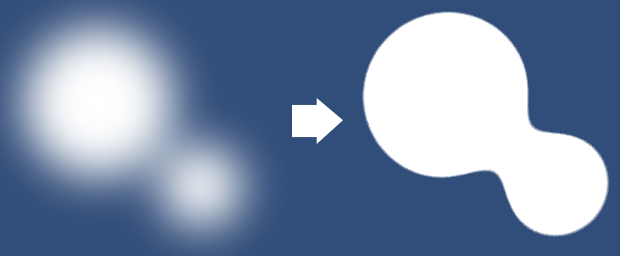

only check for each collision once

If you've checked if a collides with b, you don't need to check if b collides with a too.

It follows from using integer ids that you avoid these checks with a simple if-statement. If the id of a potential collision is less-than-or-equal to the current id being checked for, it can be skipped! This way, you'll only ever check each possible pairing once; that'll more than half the number of collision checks.

unsigned * bitmap;

int bitmap_len;

...

for(int i=0; i unsigned mask = bitmap[i];

while(mask) {

const int j = __builtin_ffs(mask);

const int slot = i*sizeof(unsigned)*8+j;

for(int neighbour: get_neighbours(slot))

if(neighbour > slot)

check_for_collision(slot,neighbour);

mask >>= j;

}

respect the order of collisions

Rather than evaluating a collision as soon as a pair is found, compute the distance to hit and store that in a binary heap. These heaps are how you typically do priority queues in path-finding, so is very useful utility code.

Mark each node with a sequence number, so you can say:

- A10 hits B12 at 6

- A10 hits C12 at 3

Obviously after you've gathered all the collisions, you start popping them from the priority queue, soonest first. So the first you get is A10 hits C12 at 3. You increment each object's sequence number (the 10 bit), evaluate the collision, and compute their new paths, and store their new collisions in the same queue. The new collision is A11 hits B12 at 7. The queue now has:

- A10 hits B12 at 6

- A11 hits B12 at 7

Then you pop from the priority queue and its A10 hits B12 at 6. But you see that A10 is stale; A is currently at 11. So you can discard this collision.

Its important not to bother trying to delete all stale collisions from the tree; removing from a heap is expensive. Simply discard them when you pop them.

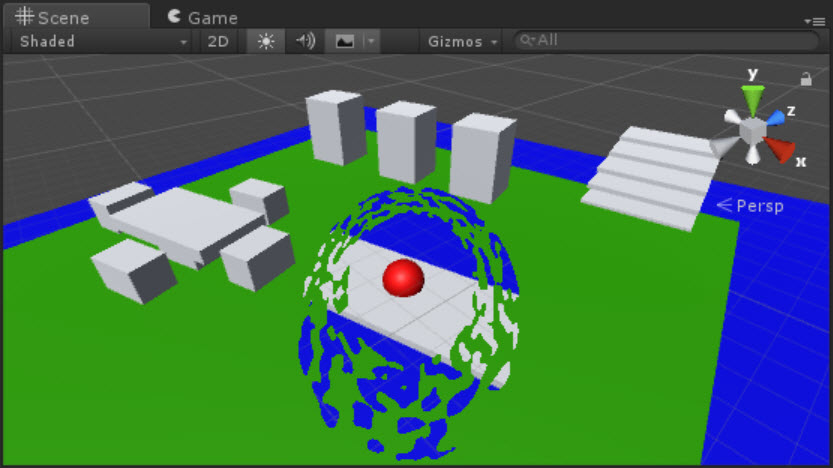

the grid

You should consider using a quadtree instead. Its a very straightforward data-structure to implement. Often you see implementations that store points but I prefer to store rects, and store the element in the node that contains it. This means that to check collisions you only have to iterate over all bodies, and, for each, check it against those bodies in the same quad-tree node (using the sorting trick outlined above) and all those in parent quad-tree nodes. The quad-tree is itself the possible-collision list.

Here's a simple Quadtree:

struct Object {

Rect bounds;

Point pos;

Object * prev, * next;

QuadTreeNode * parent;

};

struct QuadTreeNode {

Rect bounds;

Point centre;

Object * staticObjects;

Object * movableObjects;

QuadTreeNode * parent; // null if root

QuadTreeNode * children[4]; // null for unallocated children

};

We store the movable objects separately because we don't have to check if the static objects are going to collide with anything.

We are modeling all objects as axis-aligned bounding boxes (AABB) and we put them in the smallest QuadTreeNode that contains them. When a QuadTreeNode a lot of children, you can subdivide it further (if those objects distribute themselves into the children nicely).

Each game tick, you need to recurse into the quadtree and compute the move - and collisions - of each movable object. It has to be checked for collisions with:

- every static object in its node

- every movable object in its node that is before it (or after it; just pick a direction) in the movableObjects list

- every movable and static object in all parent nodes

This will generate all possible collisions, unordered. You then do the moves. You have to prioritise these moves by distance and 'who moves first' (which is your special requirement), and execute them in that order. Use a heap for this.

You can optimise this quadtree template; you don't need to actually store the bounds and centre-point; that's entirely derivable when you walk the tree. You don't need to check if a model is within the bounds, only check which side it is of the centre-point (a "axis of separation" test).

To model fast flying things like projectiles, rather than moving them each step or having a separate 'bullets' list that you always check, simply put them in the quadtree with the rect of their flight for some number of game steps. This means that they move in the quadtree much more rarely, but you aren't checking bullets against far off walls, so its a good tradeoff.

Large static objects should be split into component parts; a large cube should have each face separately stored, for example.

you can see this shader in

you can see this shader in